Will I shock you, dear reader, if I tell you that physics has a gender problem? Women are underrepresented in physics at every career stratum. Whether you look at undergraduate students or faculty, it’s mostly dudes.

Since we’re talking about gender today, I’ll say right up front that with a few notable exceptions, lots of the data out there about STEM & gender assumes that there are only two genders. My nonbinary folks out there: we see you. Maybe one day the data will see you too.

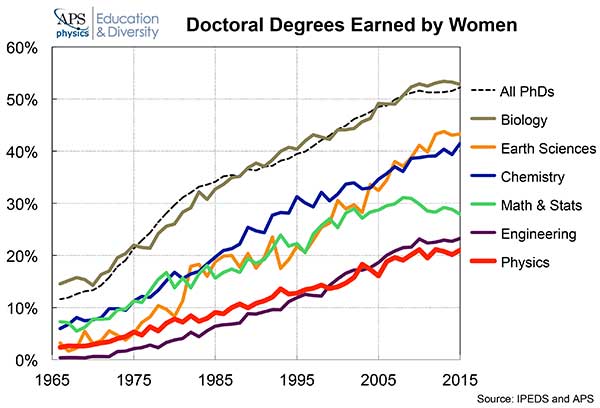

Women make up about 50% of the population, so you don’t have to do much work to see how screwed up our ratios are. As of 2015, women made up 20% of all physics majors and only about 10% of all tenured physics faculty. You can look at other metrics and see the same story. Holman, Stuart-Fox, and Hauser just published a study in PLOS Biology suggesting that, at the rate we’re going, it will take 256 years before women are represented among physics authors at parity with men.

There’s no shortage of work that tries to explain the sources of this imbalance, and the forefront of this research is an interesting place to watch. If you want to understand a complex problem, though, it’s a good move to break it up into little sub-problems. So some folks study the role that family care plays in this imbalance; others study overt sexism in the workplace. Today’s post is about gender bias in STEM hiring. A few meetings ago, our Inclusivity Journal Club read and discussed “Science faculty’s subtle gender biases favor male students” by Moss-Racusin et al. Here’s a link to the article and the supplemental material.

The authors put together a job application package for a fictional aspiring lab manager and sent it to 127 physics, biology, and chemistry professors. These application packages were all identical, except that for half of them the candidate was named John, and for half of them the candidate was named Jennifer. The professors were asked to rate the competence and hireability of this fictional candidate, to rate their own willingness to mentor this candidate, and to propose a starting salary for the position.

I bet you can guess what happened!

What happened was that the male version of this candidate was rated significantly better across all metrics, and the average proposed starting salary for male candidates was about $4k higher. Maybe a little more surprising: this bias towards male applicants was about the same, regardless of the gender of the person doing the evaluation.

This study gets a lot of play in the popular science press, and for good reason. It’s hard to do a double-blind study on gender bias! Or bias of any kind, really. It’s an interesting exercise to try and design a study like this in your head. Would you just give someone a survey asking whether they’re biased?

Since lots of people have written about this paper already, I’ll focus on our group’s reaction. I noticed the mood of the room move through a couple of distinct phases; maybe you’ll recognize those phases in yourself when you read the paper, too. We started off feeling collectively angry and disappointed on behalf of all women scientists. Then, I’m not sure what motivated this, but the mood shifted and we all took turns being very critical. And then once that subsided, there was a self-reflective phase where we all did a little personal growth.

First phase: Sexism is stupid and dumb, and the paper shows its effects to you pretty clearly, with easy-to-understand graphs. It’s no wonder we started off feeling angry.

Next phase: the knives came out. Once we had a handle on the basics of the paper, we started picking at it in the way that physicists are famous and famously mocked for doing. Put more charitably, critical engagement is an important intellectual step, and some nerds regard it as a way of showing respect. Our critical engagement looked kind of like this:

- The physicists in the room, used to working with terabyte-scale data sets for high-energy collider analysis, were all a little scandalized that this study relied on so few data points. Only 127 respondents? To us, that seemed like too small a number to do anything really cool. (Our social scientist pal convinced us it was ok.)

- We took turns noticing that 78% of the respondents were male, and 81% were White. Did this further skew the results? Or is this just “the way it is” in the sciences, making the study actually more representative?

- In the real world, lots of hiring gets done by committees, in part to help reduce the effects of individual bias. How would the results change if, instead of individual professors doing this rating, it was done by groups?

- We all had a hard time understanding how these results would translate into actual hiring outcomes. (Maybe you can tell that we started asking quite a lot from this one little five-page paper.)

- Why weren’t the respondents given any open-ended questions?

- How would the results change if the fictional applicant was going for a tenure-track job? Would prospective long-term colleagues be evaluated differently from low-level, more transient positions?

- It would have been interesting to see how geography influenced responses. For example, $25k per year goes a lot farther in St. Louis than it does in San Francisco. Tying the proposed salary to local cost-of-living would have been a neat addition with maybe some explanatory power.

So we went through the phases of appreciating and then being critical of the paper. After we’d gotten all that “critical engagement” out of our systems, we got a little self-aware. Physicists’ papers are built around very specific, narrow arguments. Why should we expect anything different from sociologists? Maybe this paper is not iron-clad proof of pan-institutional sexism, but why should we expect it to be? Moss-Racusin et al. asked a very narrow question, and gave us a very specific answer. That’s why the study is compelling.

There’s even scholarship on the way people react to papers about bias. It’s fascinating! The argument goes like this: Objectivity is a culturally desirable trait in STEM. Studies that discuss bias are very triggering for a particular kind of person who enjoys thinking of themselves as an ice-cold rational scholar. Such people (men more often than women) tend to work extra-hard to trash studies about bias in STEM. Were we all collectively doing this?

Real talk: I caught myself getting a little mentally defensive during this discussion. “I would do better than these other respondents. I’m much more aware of my own biases.” That kind of defensive thinking is part of the problem. So this is one of the reasons I ended up liking this paper so much. I learned something about bias in STEM and about the way social scientists roll, sure. But I also got pushed into being a little more self-aware. Not bad for an hour’s work, huh?

Stay tuned as I get caught up on these blog posts! Next up: Devine et al.’s “Long-term reduction in implicit race bias: A prejudice habit-breaking intervention”.